Content

- DCT Features

- Eigenfaces

- Appearance Models

- Visual Feature Efficacy

- Model Fusion

Audiovisual Processing CMP-6026A

Dr. David Greenwood

The main limitation of shape-only features is that a lot of information is missing.

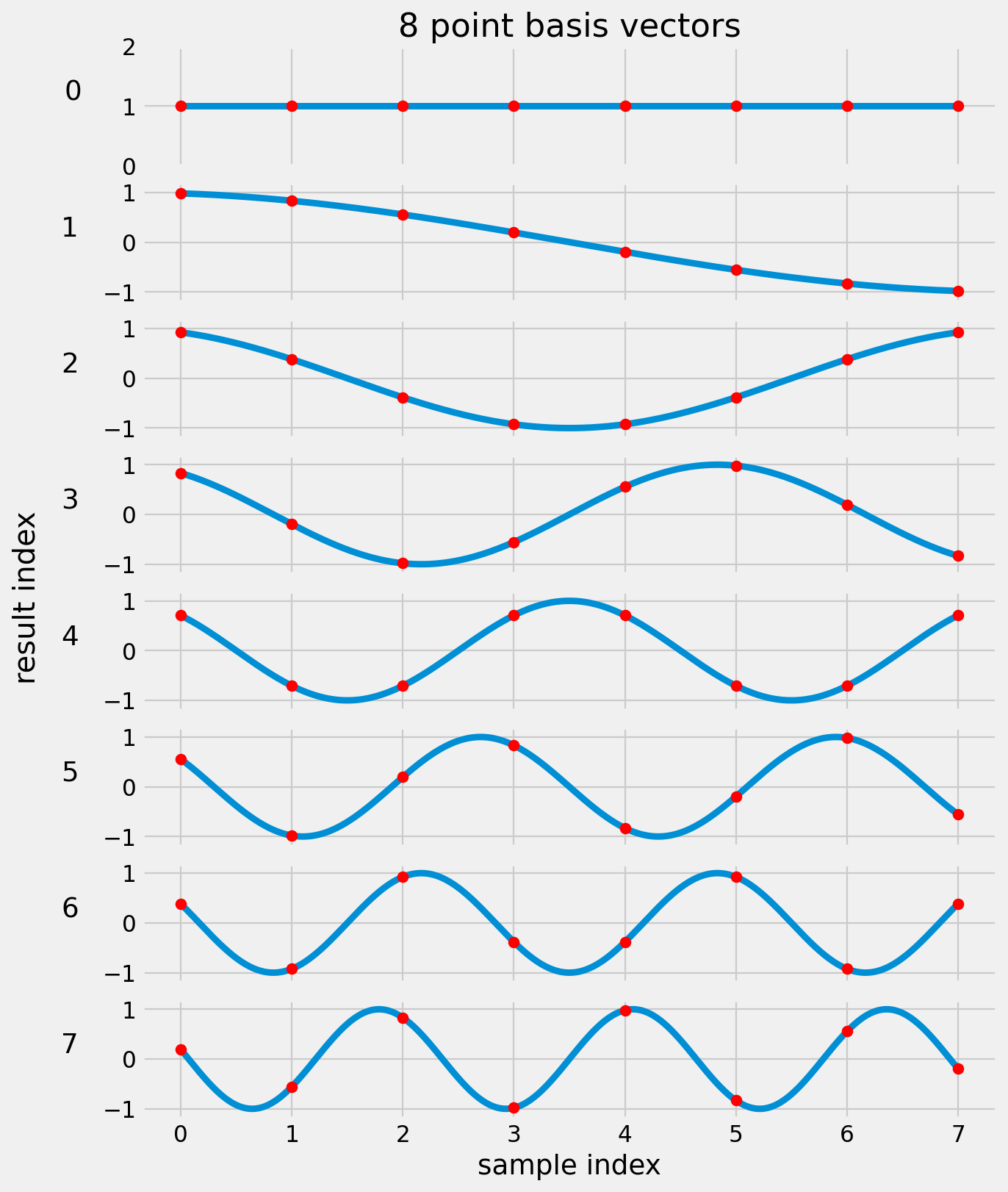

The DCT performs a similar function to the DFT: it transforms a signal (or image) from the spatial domain to the frequency domain.

\[ X_k = s(k) ~ \sum_{n=0}^{N-1} x_n \cos \left[ \frac{\pi k (2n + 1)}{2N} \right] \]

Where:

\[Y_k = \cos \left[ \frac{\pi k (2n + 1)}{2N} \right]\]

\[n = 0, 1, 2, \dots, N-1\]
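The sum above can be evaluated directly. The following is a minimal sketch in plain Python. The notes do not define the scaling function \(s(k)\), so the orthonormal choice \(s(0) = \sqrt{1/N}\), \(s(k) = \sqrt{2/N}\) for \(k > 0\) is an assumption, and the function name `dct_1d` is purely illustrative.

```python
import math

def dct_1d(x):
    """Evaluate X_k = s(k) * sum_n x_n cos(pi k (2n + 1) / (2N)) for every k."""
    N = len(x)
    X = []
    for k in range(N):
        # Assumed orthonormal scaling; s(k) is not specified in the notes.
        s = math.sqrt(1.0 / N) if k == 0 else math.sqrt(2.0 / N)
        total = sum(
            x[n] * math.cos(math.pi * k * (2 * n + 1) / (2 * N))
            for n in range(N)
        )
        X.append(s * total)
    return X

# A constant signal has energy only in the k = 0 (DC) coefficient.
signal = [8.0, 8.0, 8.0, 8.0]
coeffs = dct_1d(signal)
```

With this scaling the transform preserves energy, so the sum of squared coefficients equals the sum of squared samples.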

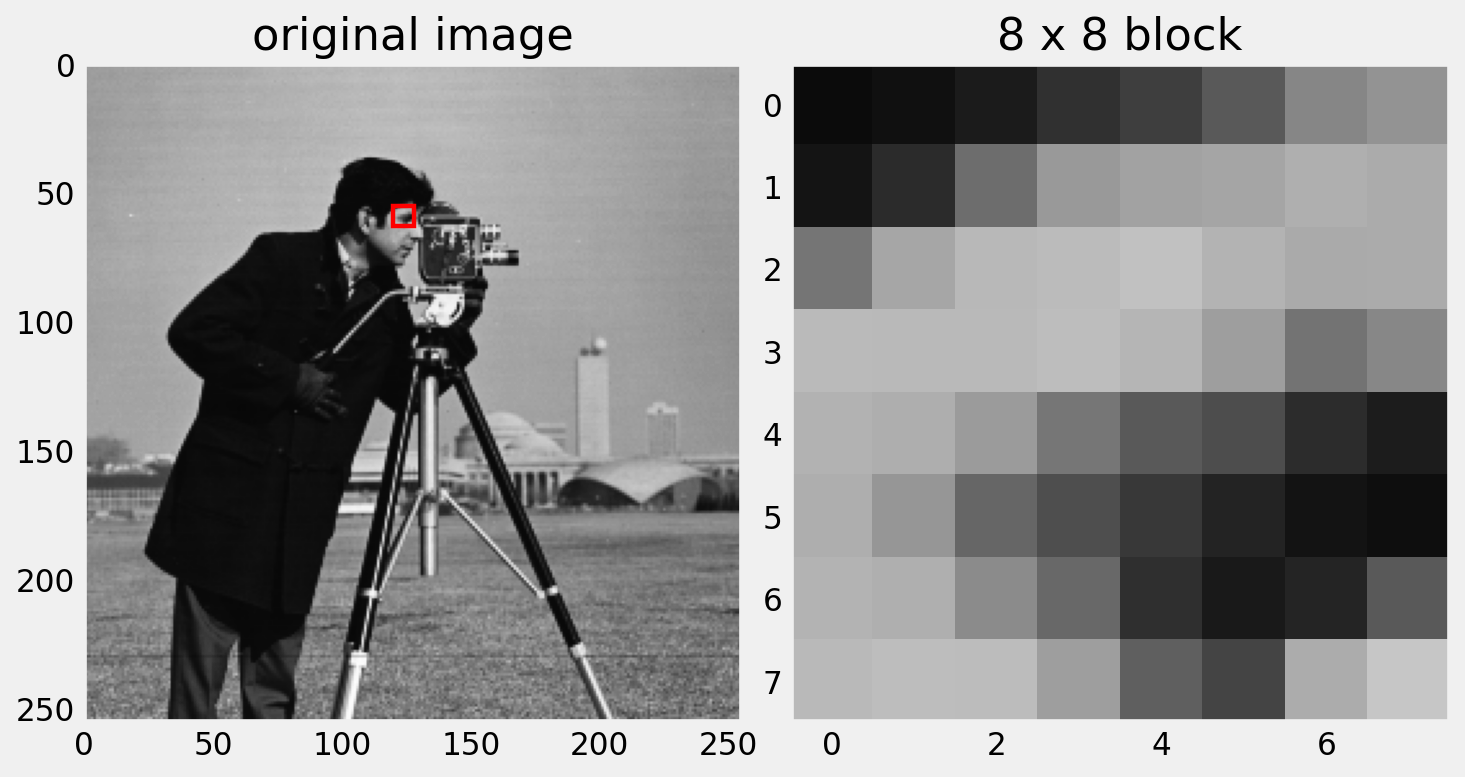

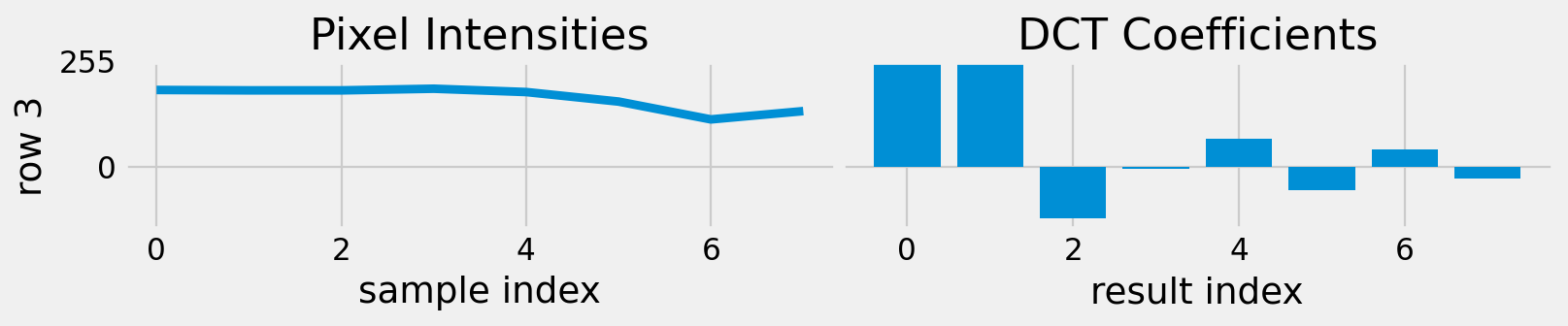

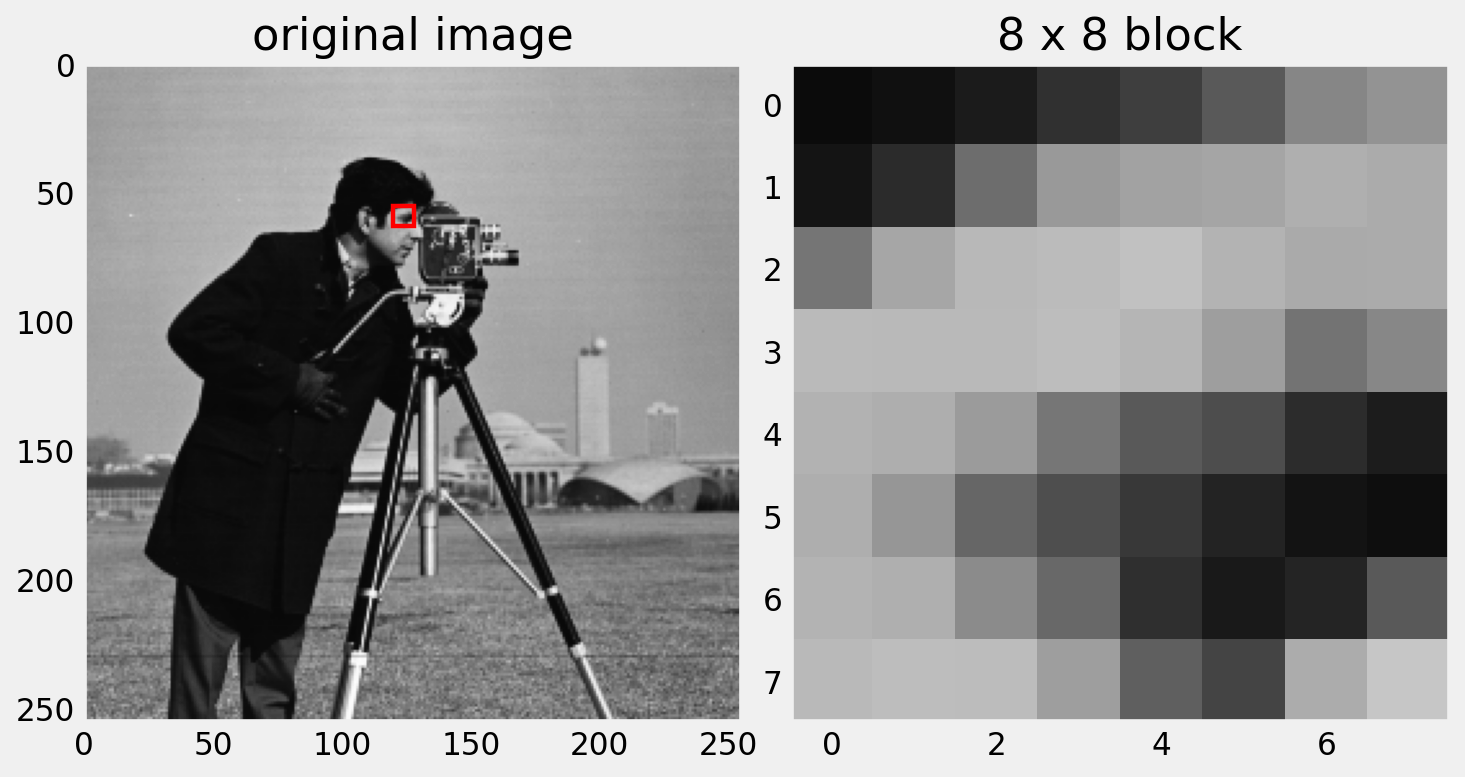

Let’s look at one 8 x 8 block in an image.

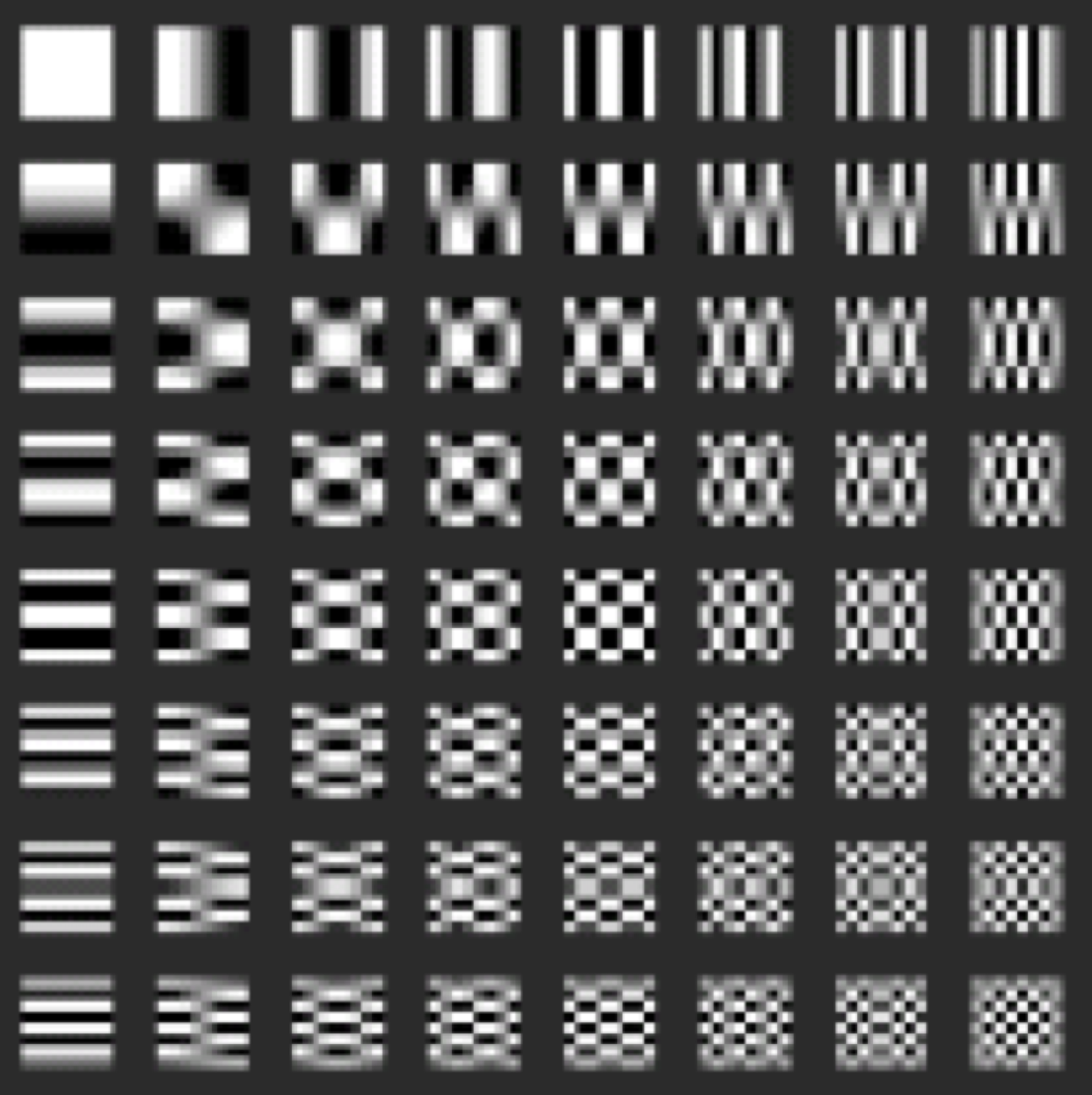

We have only considered vectors so far.

\[ X_{u, v} = s_u s_v ~ \sum_{x=0}^{N-1} \sum_{y=0}^{N-1} I(x, y) ~ \cos \left[ \frac{\pi u (2x + 1)}{2N} \right] \cos \left[ \frac{\pi v (2y + 1)}{2N} \right] \]

Rather than basis vectors, we have basis images.
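Because the double sum factorises into a cosine term in \(x\) and a cosine term in \(y\), the 2D DCT can be computed as two matrix products. The sketch below uses NumPy and assumes orthonormal scaling for \(s_u\) and \(s_v\) (not stated in the notes); `dct_matrix`, `dct_2d` and `idct_2d` are illustrative names.

```python
import numpy as np

def dct_matrix(N):
    """N x N matrix whose row k is the k-th scaled cosine basis vector."""
    n = np.arange(N)
    k = n.reshape(-1, 1)
    C = np.cos(np.pi * k * (2 * n + 1) / (2 * N))
    C[0, :] *= np.sqrt(1.0 / N)   # assumed orthonormal scaling for k = 0
    C[1:, :] *= np.sqrt(2.0 / N)  # assumed orthonormal scaling for k > 0
    return C

def dct_2d(block):
    """2D DCT of a square block: X = C I C^T."""
    C = dct_matrix(block.shape[0])
    return C @ block @ C.T

def idct_2d(coeffs):
    """Inverse 2D DCT; C is orthogonal, so its inverse is its transpose."""
    C = dct_matrix(coeffs.shape[0])
    return C.T @ coeffs @ C

# A flat 8 x 8 block of value 100: only the DC coefficient is non-zero.
block = np.full((8, 8), 100.0)
X = dct_2d(block)
```

For the flat block above, `X[0, 0]` is 800 (the scaling \(1/8\) times the pixel sum 6400) and every other coefficient is zero up to rounding.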

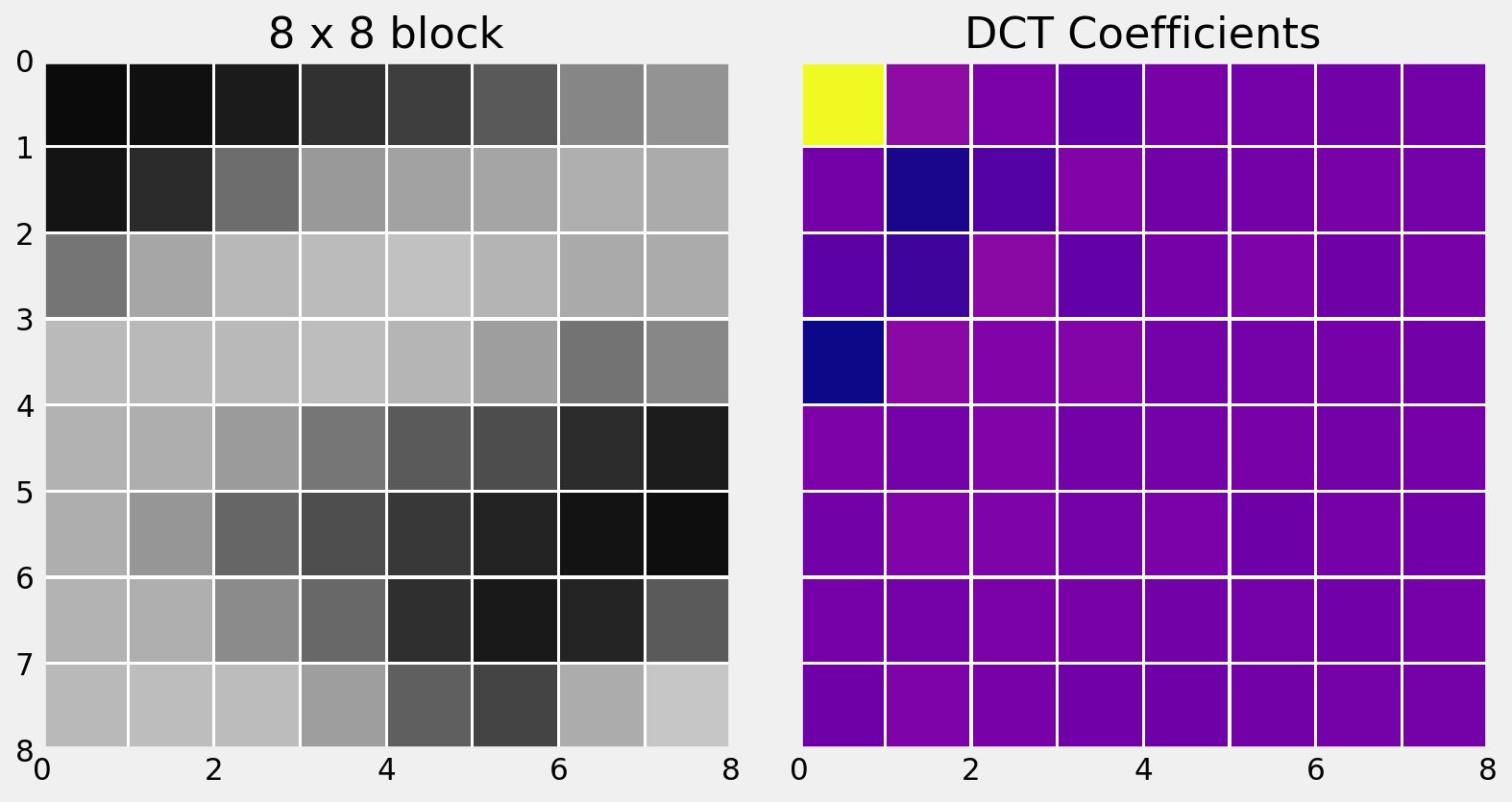

Let’s look again at the same 8 x 8 block in an image.

Here is the 2D DCT of the block.

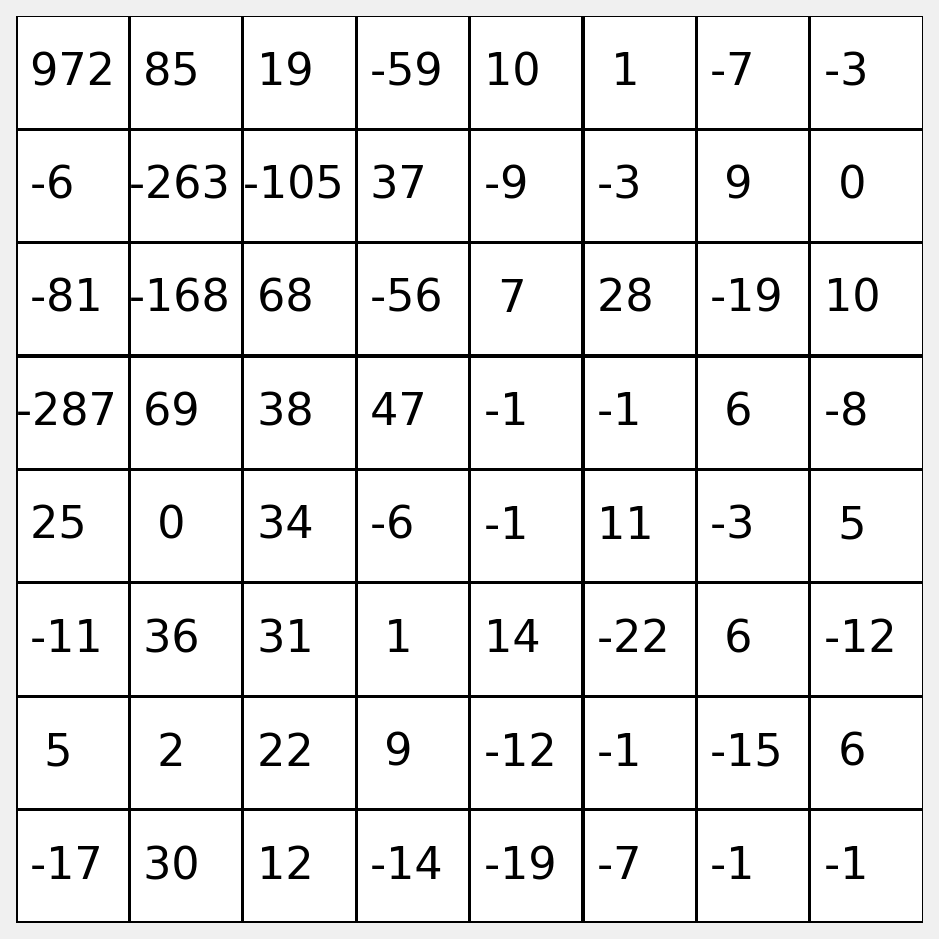

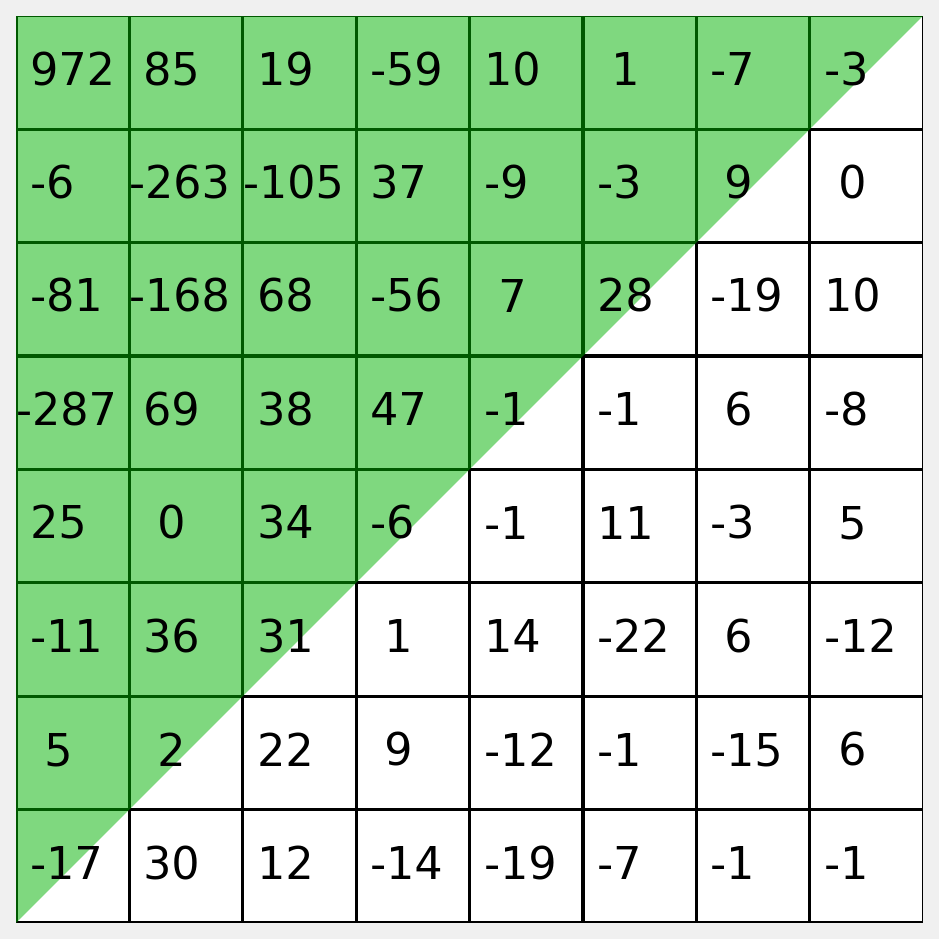

Let’s examine the actual values of the coefficients.

Notice that the most significant values congregate at the top left.

We can stack the top left values to make a feature vector.

\(f = (972, 85, 19, -59, \dots)\)
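One way to stack the top-left values is to keep every coefficient with \(u + v\) below a threshold and read them out diagonal by diagonal. This is a sketch only: the ordering and the function name `top_left_features` are assumptions, and the coefficient array is a placeholder rather than the block shown in the figures.

```python
import numpy as np

def top_left_features(coeffs, num_diagonals):
    """Stack coefficients with u + v < num_diagonals into a 1D feature vector.

    The ordering (by anti-diagonal, then by row) is an illustrative choice.
    """
    N = coeffs.shape[0]
    idx = [(u, v) for u in range(N) for v in range(N) if u + v < num_diagonals]
    idx.sort(key=lambda uv: (uv[0] + uv[1], uv[0]))
    return np.array([coeffs[u, v] for u, v in idx])

# Placeholder coefficients: entry (u, v) holds the value 8u + v.
coeffs = np.arange(64, dtype=float).reshape(8, 8)
f = top_left_features(coeffs, 3)
```

Keeping \(d\) diagonals gives a feature vector of length \(d(d+1)/2\) for \(d \le N\), so three diagonals give six features.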

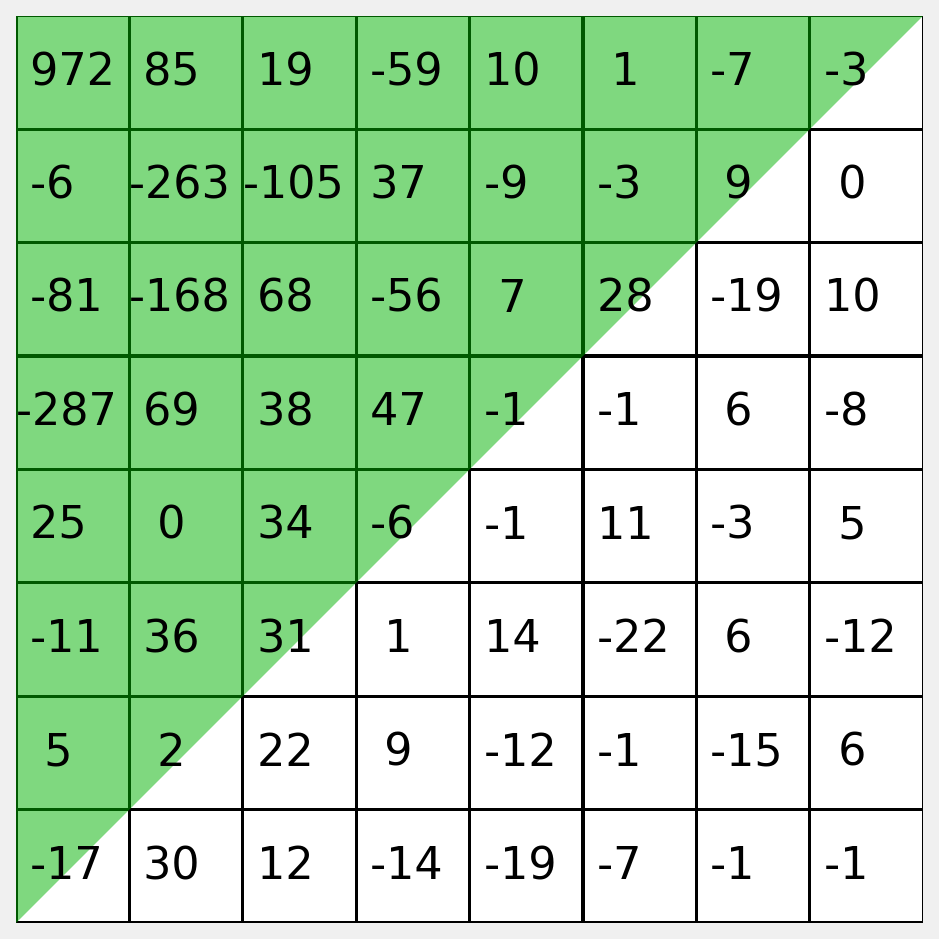

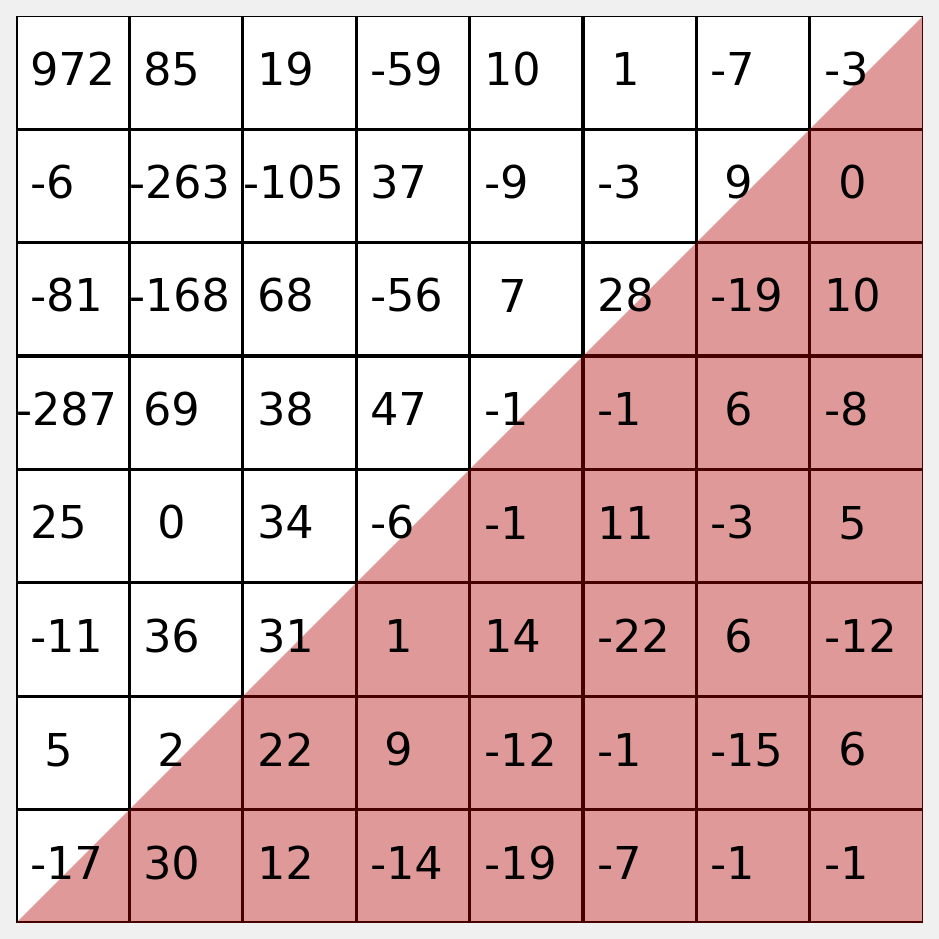

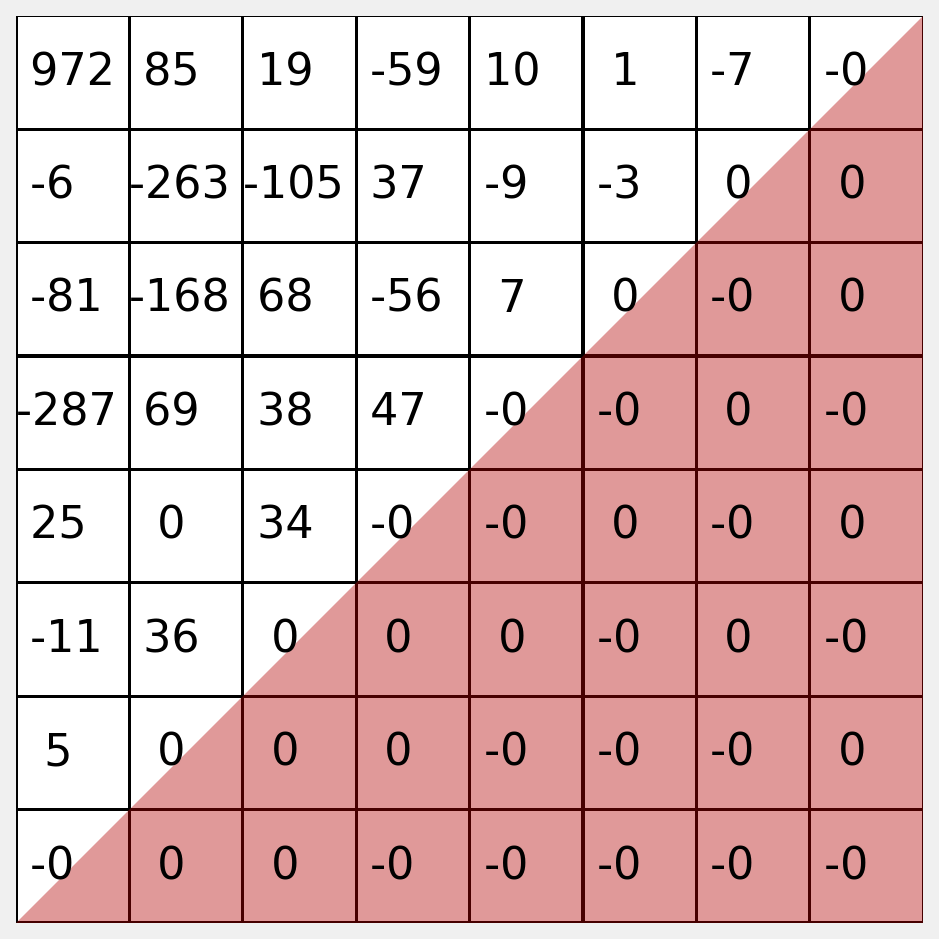

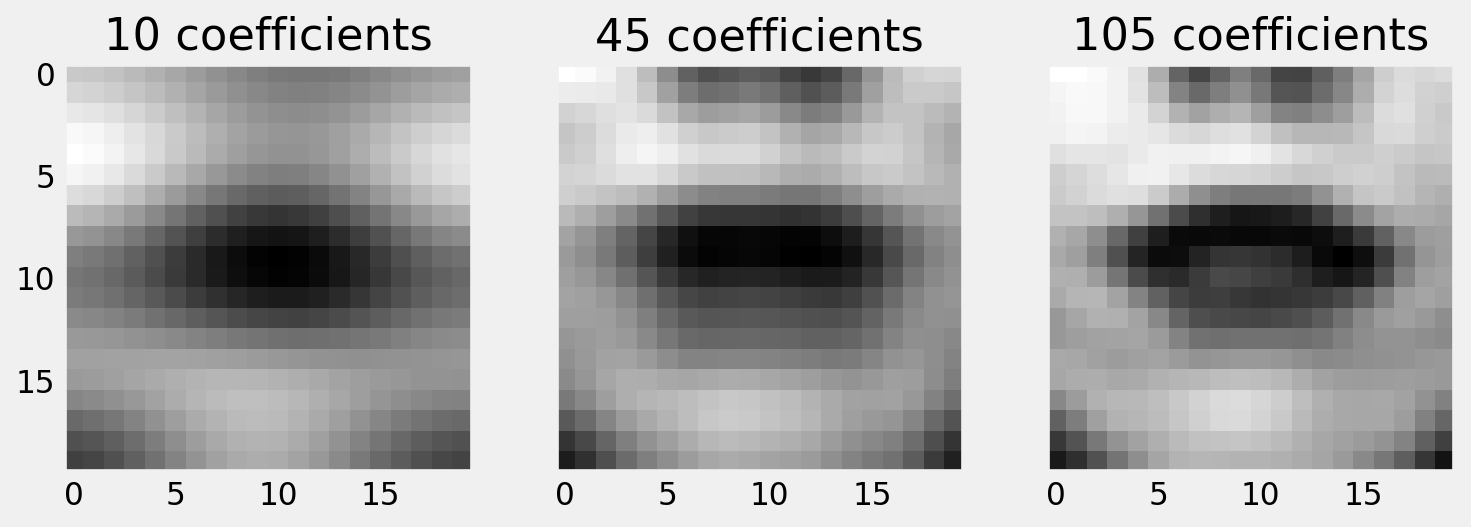

If we want to reconstruct the image using the inverse DCT, we can set the low values to zero to view the reconstruction loss.

Here you can see we have zeroed the lower right triangle.

You should decide empirically how many coefficients to retain. Often, far fewer than half of the coefficients are enough to produce good results.
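A small experiment along these lines is sketched below: zero the lower-right triangle of the coefficients, invert the DCT, and measure the mean squared reconstruction error for several cut-offs. The block is synthetic (a gradient plus noise), the orthonormal scaling is an assumption, and all function names are illustrative.

```python
import numpy as np

def dct_matrix(N):
    """Rows are cosine basis vectors with assumed orthonormal scaling."""
    n = np.arange(N)
    k = n.reshape(-1, 1)
    C = np.cos(np.pi * k * (2 * n + 1) / (2 * N))
    C[0, :] *= np.sqrt(1.0 / N)
    C[1:, :] *= np.sqrt(2.0 / N)
    return C

def reconstruct_low_freq(block, num_diagonals):
    """Keep coefficients with u + v < num_diagonals, zero the rest, invert."""
    C = dct_matrix(block.shape[0])
    coeffs = C @ block @ C.T
    u, v = np.indices(coeffs.shape)
    coeffs[u + v >= num_diagonals] = 0.0
    return C.T @ coeffs @ C

# Synthetic 8 x 8 block: a smooth gradient plus a little noise.
rng = np.random.default_rng(0)
x = np.arange(8)
block = 10.0 * x[:, None] + 5.0 * x[None, :] + rng.normal(0.0, 1.0, (8, 8))

cutoffs = (2, 4, 8, 15)
errors = [
    float(np.mean((block - reconstruct_low_freq(block, d)) ** 2))
    for d in cutoffs
]
```

The error cannot increase as more diagonals are kept, and a cut-off of 15 retains every coefficient of an 8 x 8 block. For recognition experiments the same sweep would be scored by recognition accuracy rather than reconstruction error.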

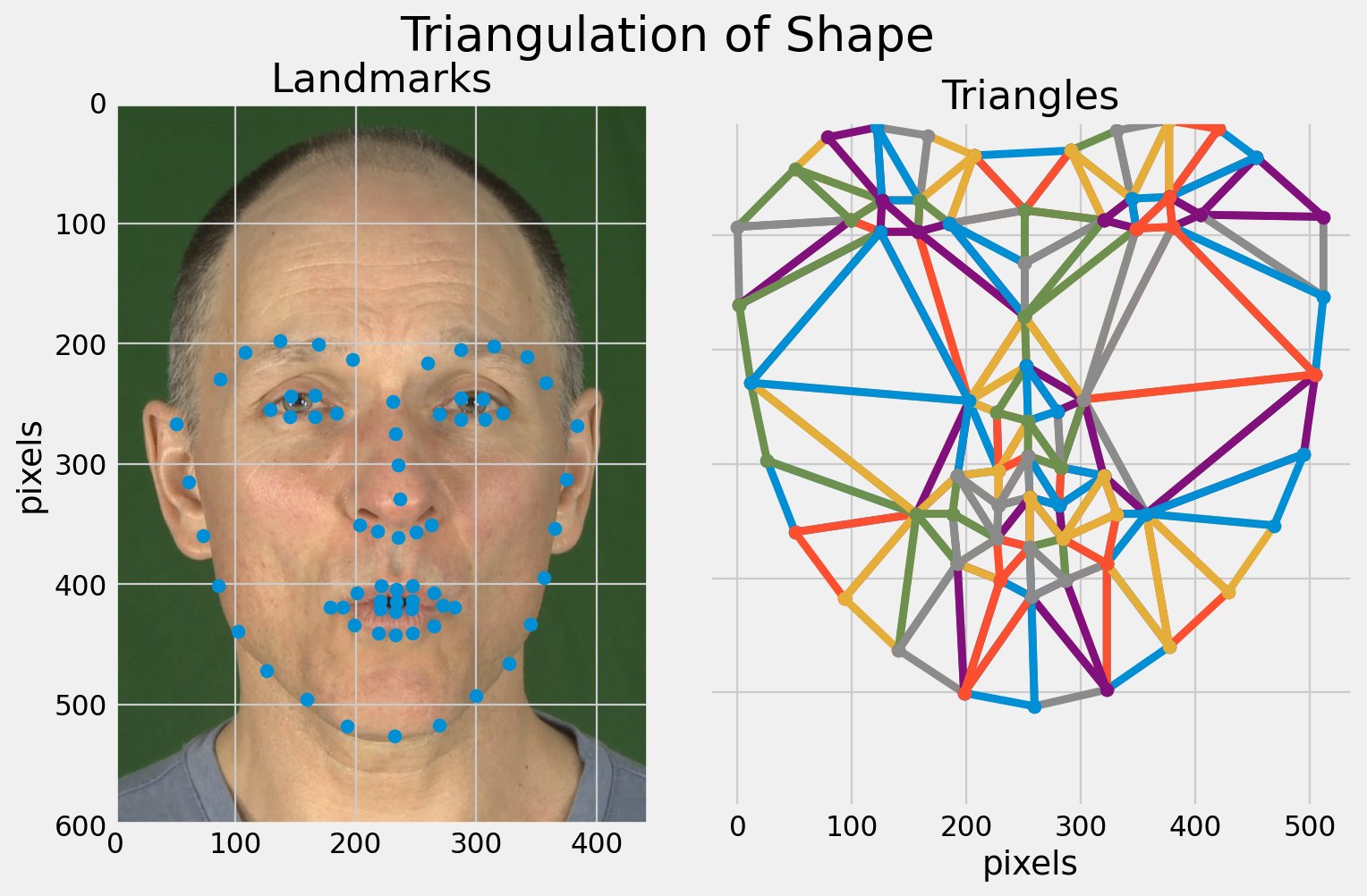

One approach for modelling the appearance of the face:

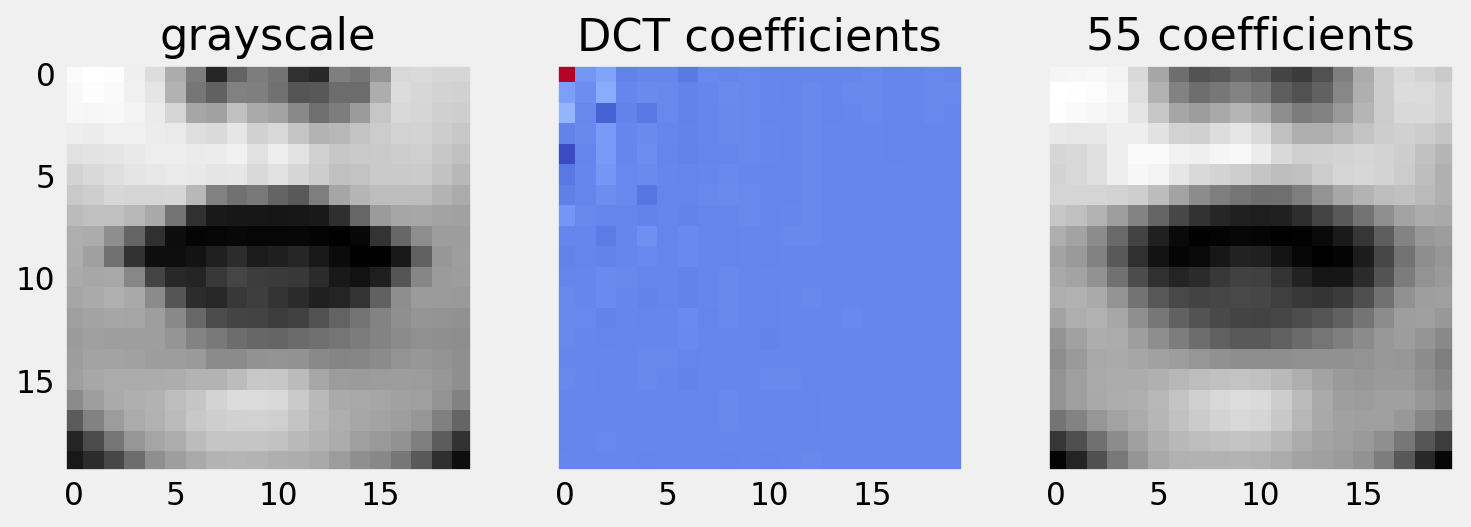

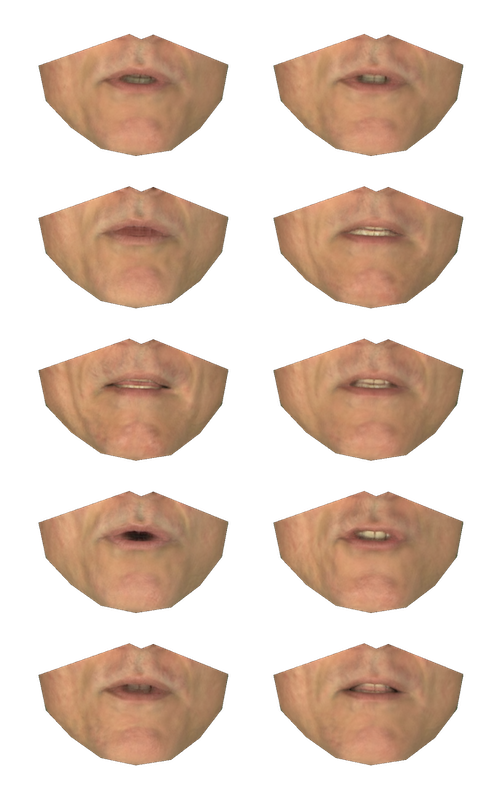

A region of interest is cropped, resized and converted to greyscale.

From the greyscale image, we can extract the DCT coefficients. We retain only the low frequency coefficients, and show a reconstruction of the image.

Perceptual evaluations of the reconstruction are informative, but your experiments should determine how useful the features are for recognising speech.

Recall, to reconstruct using PCA:

\[\mathbf{x} \approx \mathbf{\overline x} + \mathbf{P} \mathbf{b}\]

A human face can be approximated by the mean face plus a linear combination of the eigenfaces.
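The reconstruction equation can be sketched with NumPy as follows. The data here are random vectors standing in for flattened face images, the SVD is one of several ways to obtain \(\mathbf{P}\), and the function names are illustrative assumptions.

```python
import numpy as np

def fit_pca(X, num_components):
    """X holds one flattened image per row. Returns the mean and P.

    The columns of P are the leading principal directions (the eigenfaces).
    """
    mean = X.mean(axis=0)
    # SVD of the centred data; the rows of Vt are the principal directions.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    P = Vt[:num_components].T
    return mean, P

def project(x, mean, P):
    """Model parameters b for one image: b = P^T (x - mean)."""
    return P.T @ (x - mean)

def reconstruct(b, mean, P):
    """Approximate image: x ~ mean + P b."""
    return mean + P @ b

# 20 synthetic "images" of 8 x 8 pixels, flattened to 64-vectors.
rng = np.random.default_rng(0)
faces = rng.random((20, 64))
mean, P = fit_pca(faces, 5)
b = project(faces[0], mean, P)
approx = reconstruct(b, mean, P)
```

Here `b` is the compact feature vector; using more components reduces the reconstruction error on the training images.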

There is a problem with the Eigenface approach:

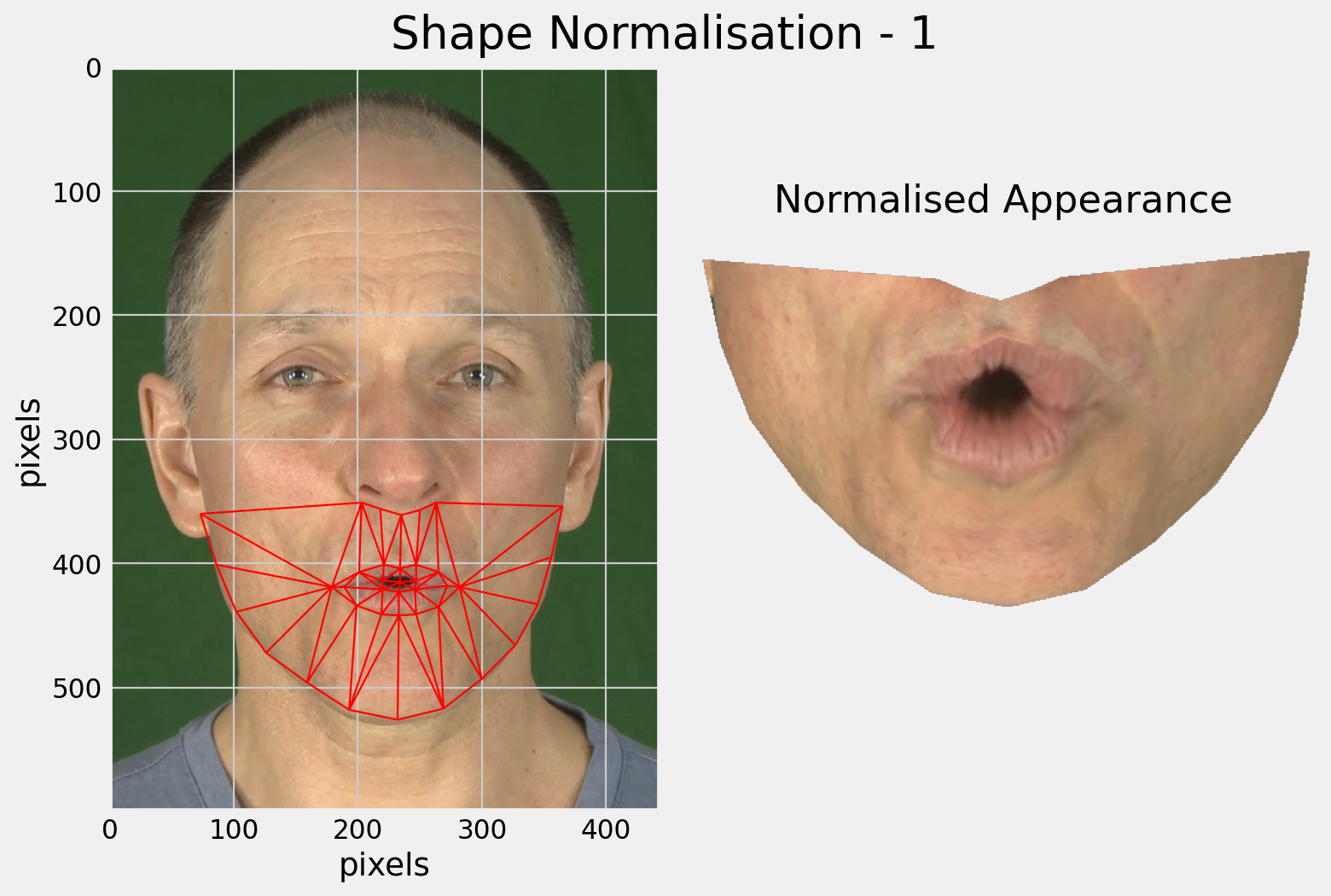

We should model only the appearance variation.

A PDM is already able to model the shape.

Each pixel should represent the same feature.

We can’t achieve this goal by merely normalising the crop.

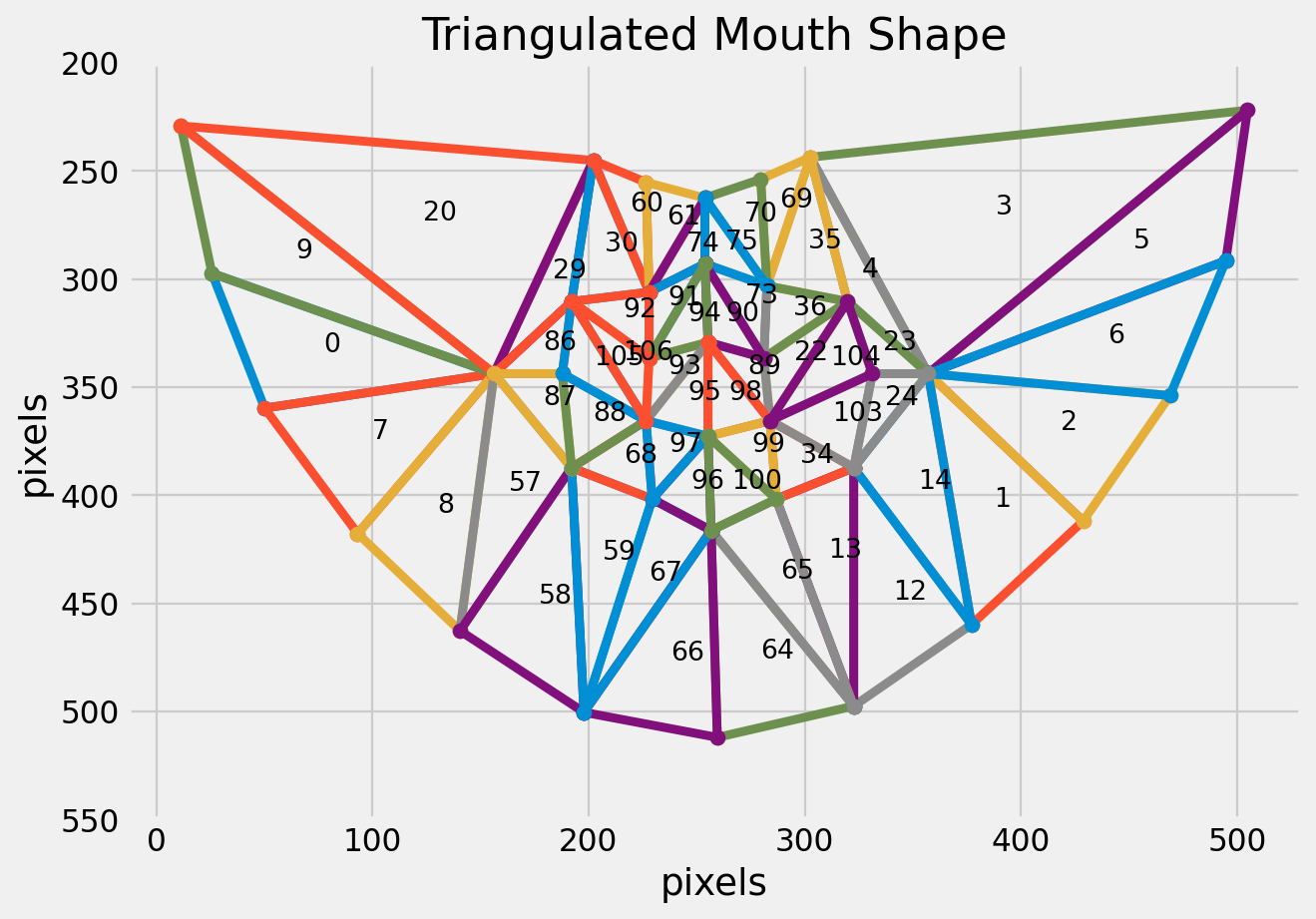

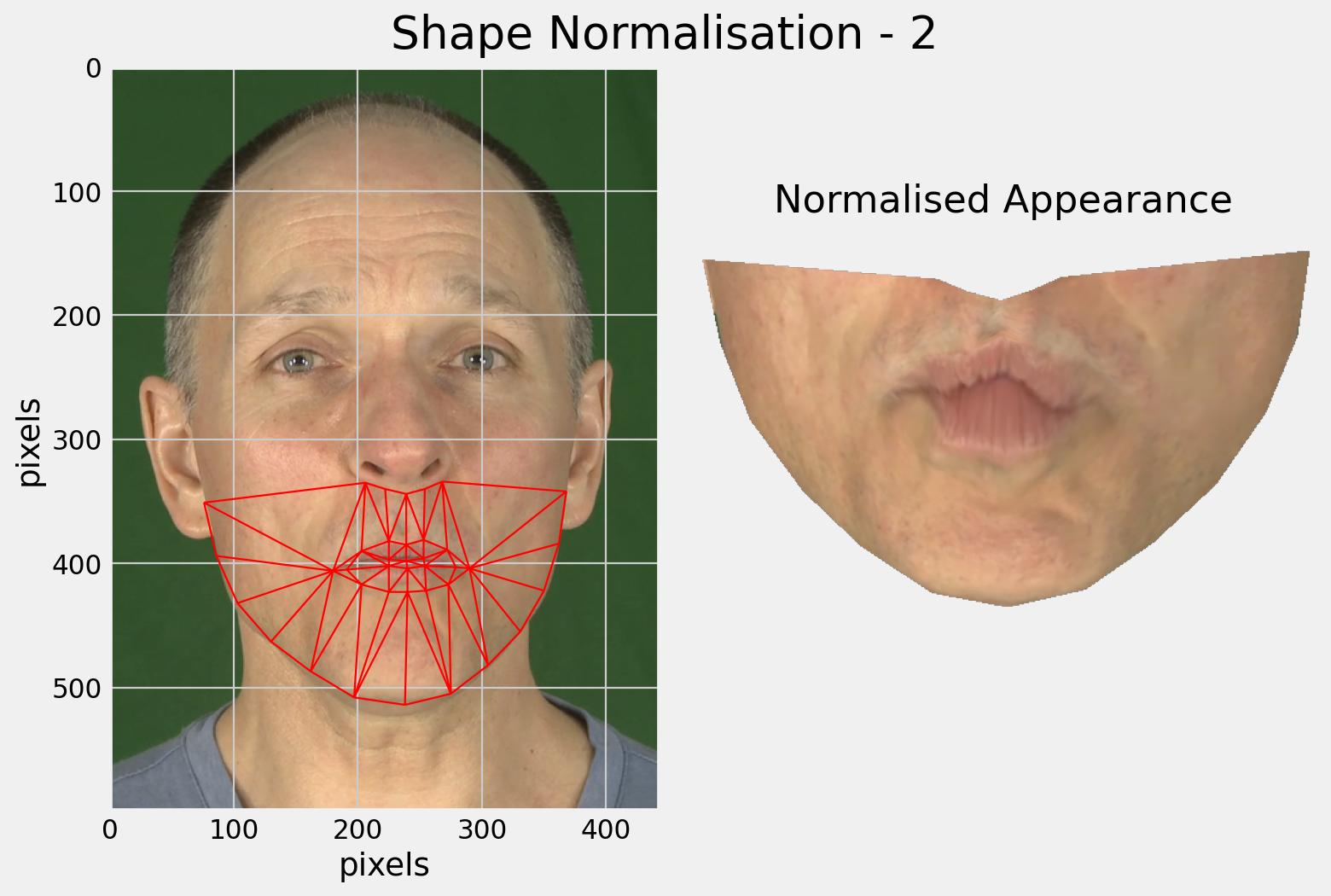

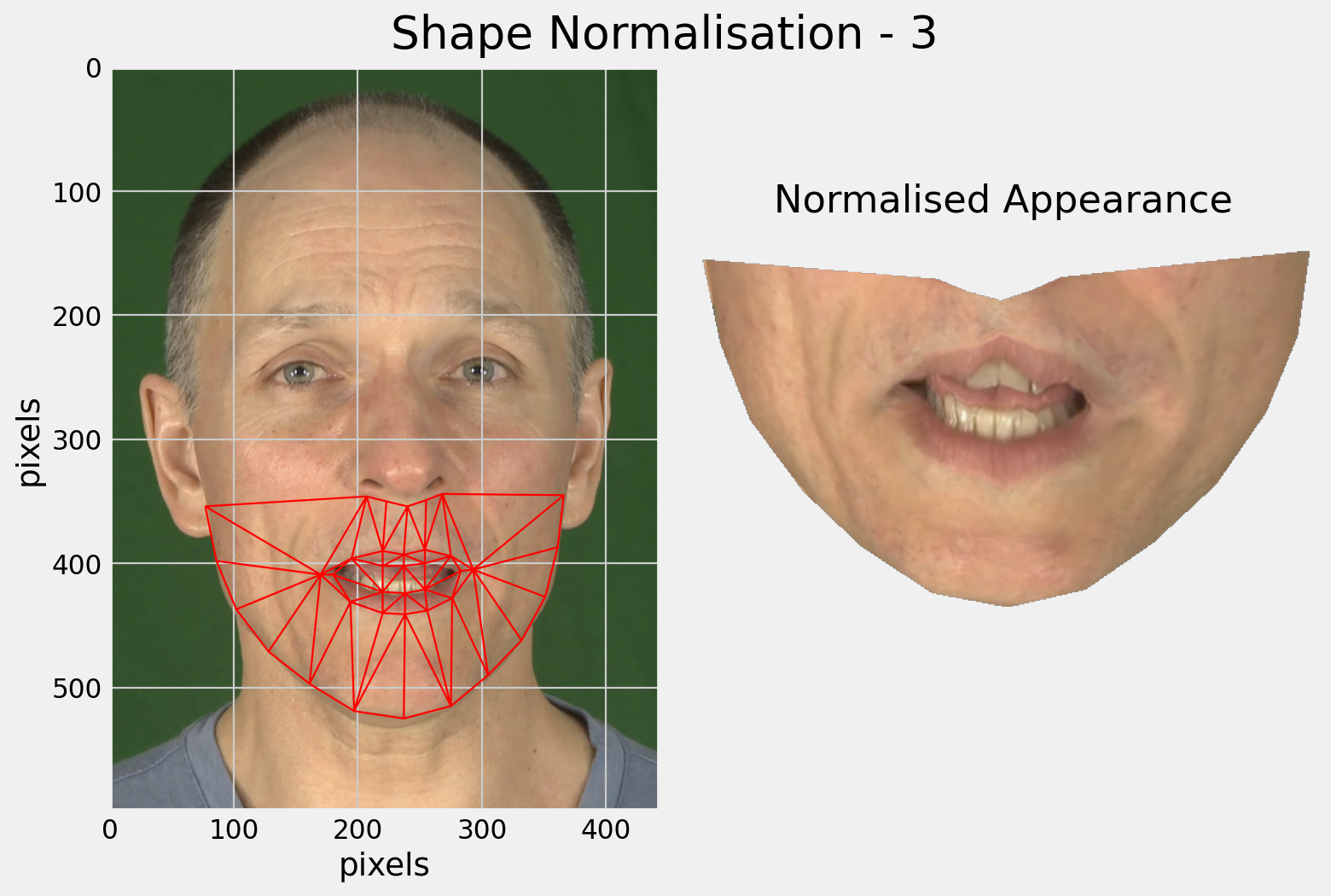

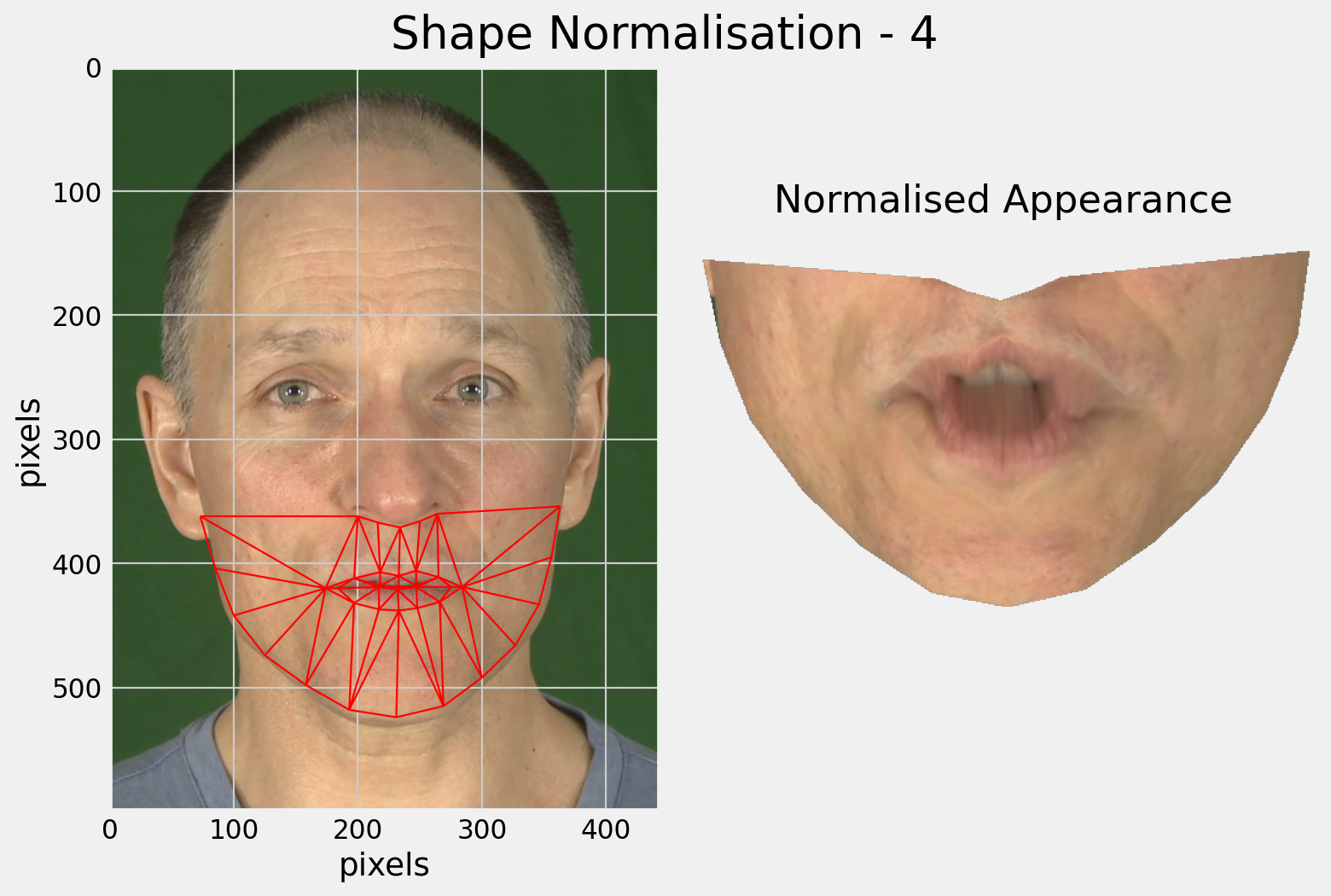

Given the hand-labelled images that were used to build the PDM, warp the images from the hand labels to the mean shape.

There are many ways to perform the warp, e.g.:

For each image in the training data, and for each triangle:

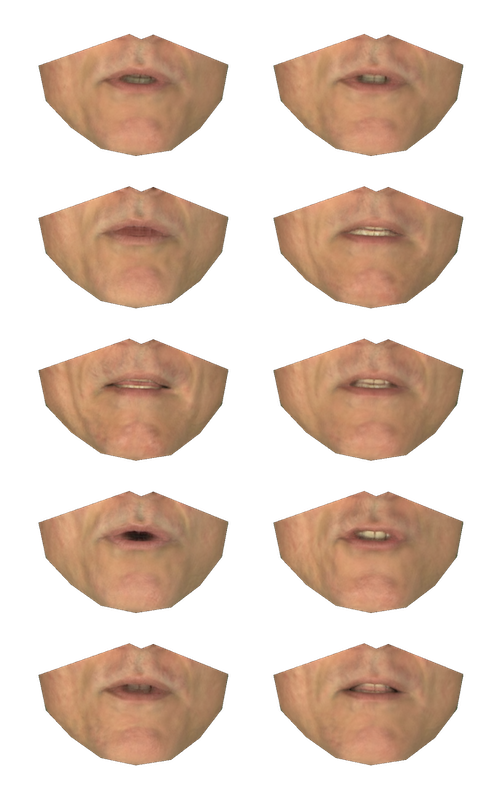

Images are shape-normalised.

Applying PCA to the shape-normalised images gives a better model of appearance.
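The notes do not fix a particular warping method. Assuming a piecewise affine warp over a triangulation of the landmarks, the per-triangle step reduces to finding the affine map between two triangles. The sketch below solves for that map with NumPy; the function names and the example triangles are hypothetical.

```python
import numpy as np

def triangle_affine(src_tri, dst_tri):
    """Return the 2 x 3 affine matrix A that maps src_tri onto dst_tri.

    Both arguments are 3 x 2 arrays of (x, y) vertices.
    """
    src_h = np.hstack([src_tri, np.ones((3, 1))])  # homogeneous coordinates
    # Solve src_h @ A.T = dst_tri for A.T, then transpose.
    return np.linalg.solve(src_h, dst_tri).T

def apply_affine(A, points):
    """Map an n x 2 array of points through the affine matrix A."""
    pts_h = np.hstack([points, np.ones((len(points), 1))])
    return pts_h @ A.T

# Map a triangle in the mean shape to the matching triangle in one image,
# so each pixel inside the mean-shape triangle knows where to sample from.
mean_tri = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
image_tri = np.array([[2.0, 3.0], [4.0, 3.0], [2.0, 6.0]])
A = triangle_affine(mean_tri, image_tri)
centre = apply_affine(A, mean_tri.mean(axis=0, keepdims=True))
```

Mapping from the mean shape back to the image (rather than forwards) is a common design choice because every output pixel then receives a value, with no holes.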

Potamianos et al. (1998) compared visual features for automatic lip-reading.

| Feature Class | Feature | Accuracy |
|---|---|---|
| Articulatory | Height and Width | 55.8% |
| | and Area | 61.9% |
| | and Perimeter | 64.7% |
| Fourier Descriptors | Outer Lip Contour | 73.4% |
| | Inner Lip Contour | 64.0% |
| | Both Contours | 83.9% |
| Appearance | LDA-based features | 97.0% |

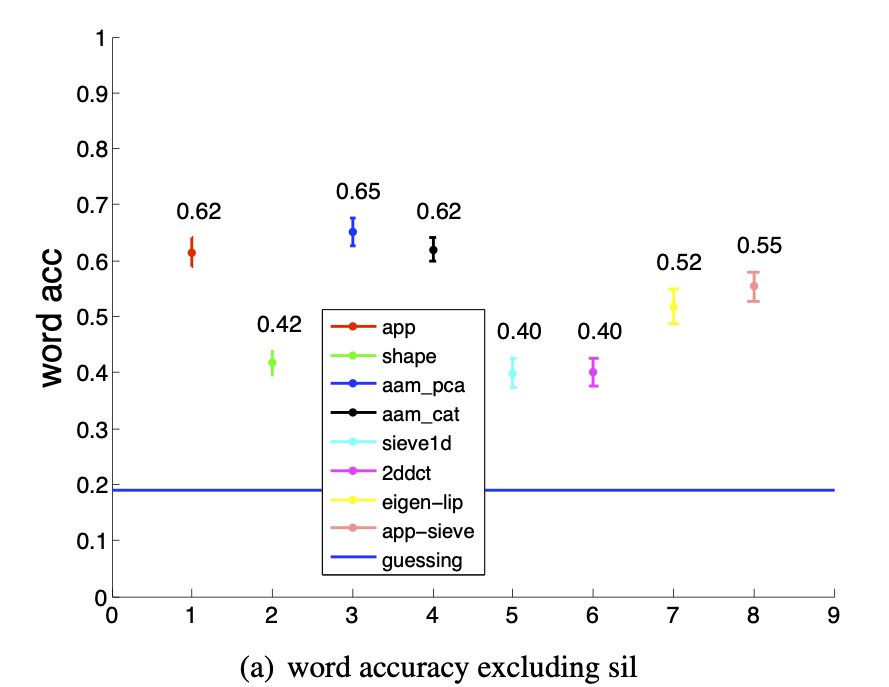

Lan et al. (2010) compared visual features for automatic lip-reading.

The acoustic and visual information needs to be combined - how and where this happens is important.

Two strategies:

Usually an estimation of the respective confidence is required.

Advantage of early integration:

Disadvantages of early integration:

Advantages of late integration:

Disadvantages of late integration:

Integrating visual information can improve the robustness of speech recognisers to acoustic noise.
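As a rough illustration of the two integration points, the sketch below treats early integration as concatenating audio and visual feature vectors before a single classifier, and late integration as a confidence-weighted sum of per-class log-likelihoods from two separate classifiers. Both formulations, the weight `audio_weight`, and the example numbers are assumptions for illustration, not the method prescribed in the notes.

```python
import numpy as np

def early_integration(audio_feat, visual_feat):
    """Concatenate per-frame feature vectors before classification."""
    return np.concatenate([audio_feat, visual_feat])

def late_integration(audio_loglik, visual_loglik, audio_weight):
    """Weighted sum of per-class log-likelihoods from two classifiers.

    audio_weight in [0, 1] reflects confidence in the acoustic stream.
    """
    return audio_weight * audio_loglik + (1.0 - audio_weight) * visual_loglik

# Made-up class likelihoods for three classes from each modality.
audio_loglik = np.log(np.array([0.5, 0.3, 0.2]))
visual_loglik = np.log(np.array([0.1, 0.7, 0.2]))

# High confidence in the audio (clean speech) versus low (noisy speech).
clean = int(np.argmax(late_integration(audio_loglik, visual_loglik, 0.9)))
noisy = int(np.argmax(late_integration(audio_loglik, visual_loglik, 0.2)))

fused = early_integration(np.zeros(13), np.zeros(10))
```

With these numbers the decision follows the audio classifier when its weight is high and the visual classifier when its weight is low, which is why an estimate of each stream's confidence matters.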

Face encoding using: