Content

- 2D Convolutions

- Smoothing Filters

- Sharpening and Unsharp Masking

- Template Matching

Audiovisual Processing CMP-6026A

Dr. David Greenwood

Filtering replaces each pixel with a value based on some function performed on its local neighbourhood.

Used for smoothing and sharpening…

Estimating gradients…

Removing noise…

Linear Filtering is defined as a convolution.

This is a sum of products between an image region and a kernel matrix:

\[g(i, j) = \sum_{m=-a}^{a}\sum_{n=-b}^{b} f(i - m, j - n) h(m, n)\]

where \(g\) is the filtered image, \(f\) is the original image, \(h\) is the kernel, and \(i\) and \(j\) are the image coordinates.

Typically:

\[a=\lfloor \frac{h_{rows}}{2} \rfloor, ~ b=\lfloor \frac{h_{cols}}{2} \rfloor\]

So for a 3x3 kernel:

\[ \text{both } m, n = -1, 0, 1\]

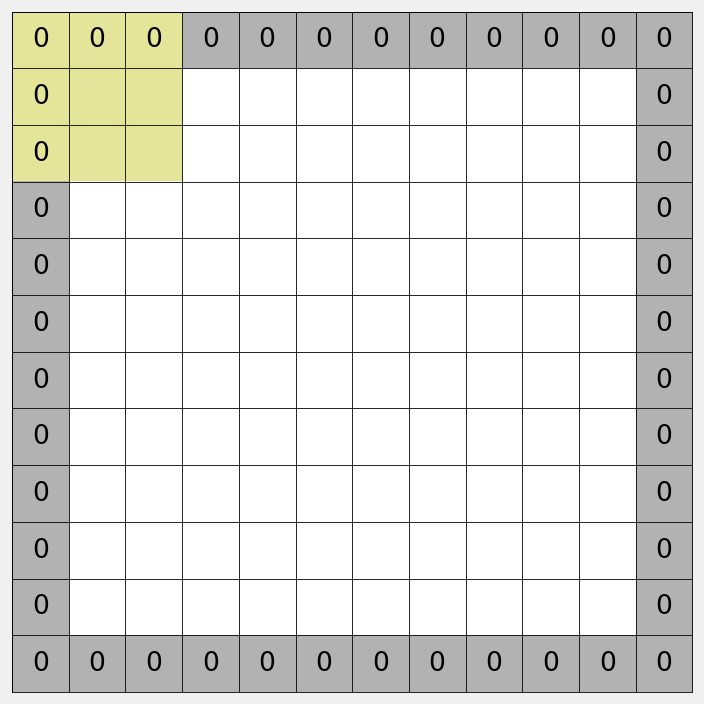

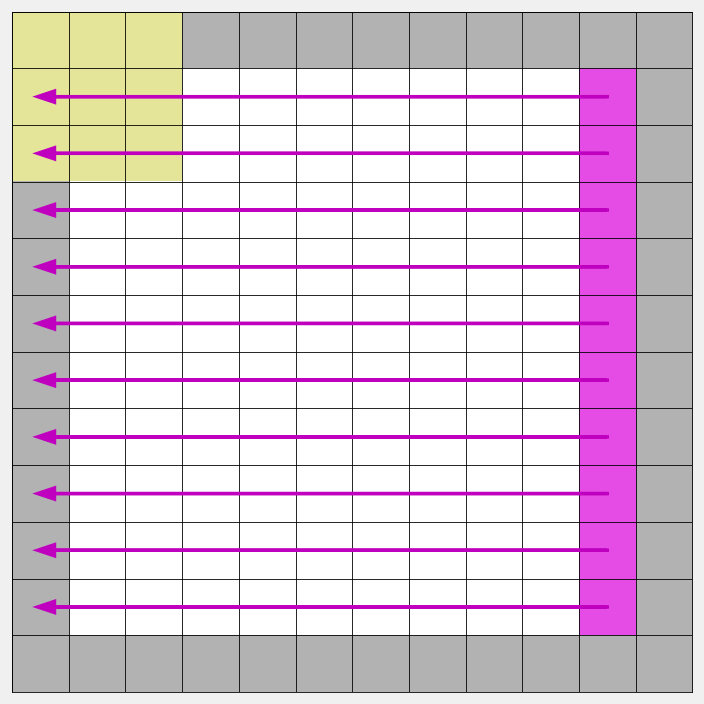

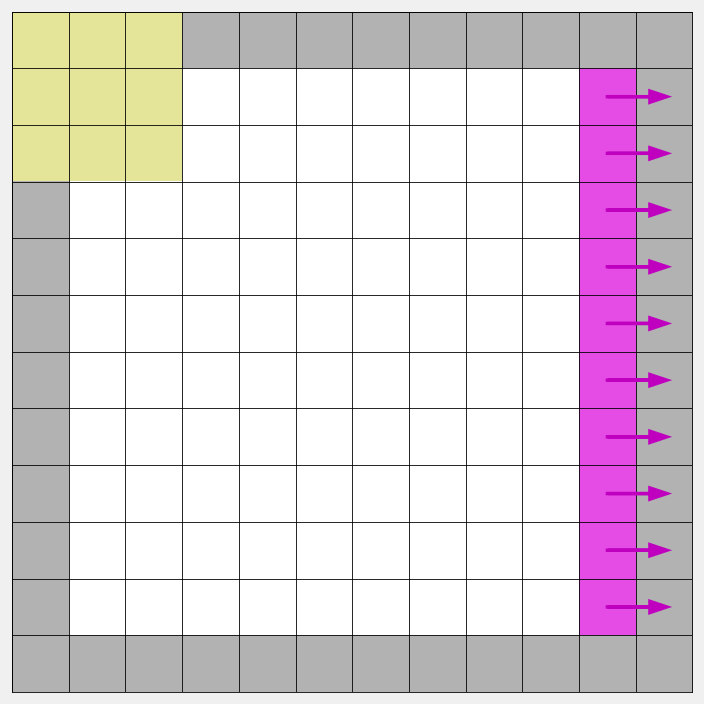

Scan image with a sub-window centred at each pixel.

Replace the pixel with the sum of products between the kernel coefficients and all of the pixels beneath the kernel.

Slide the kernel so it’s centred on the next pixel and repeat for all pixels in the image.
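The scanning procedure above can be sketched directly from the convolution sum. This is a minimal, deliberately slow illustration rather than a reference implementation: the function name `convolve2d_direct` is hypothetical, an odd-sized kernel is assumed, and zero padding is assumed at the border.

```python
import numpy as np

def convolve2d_direct(f, h):
    """Direct 2D convolution g(i, j) = sum_m sum_n f(i - m, j - n) h(m, n)."""
    f = np.asarray(f, dtype=float)
    h = np.asarray(h, dtype=float)
    a, b = h.shape[0] // 2, h.shape[1] // 2
    # Assumption: zero-pad so the window is defined at every pixel.
    fp = np.pad(f, ((a, a), (b, b)), mode="constant")
    g = np.zeros_like(f)
    for i in range(f.shape[0]):
        for j in range(f.shape[1]):
            total = 0.0
            for m in range(-a, a + 1):
                for n in range(-b, b + 1):
                    # f(i - m, j - n) is stored at fp[i - m + a, j - n + b];
                    # h(m, n) is stored at h[m + a, n + b].
                    total += fp[i - m + a, j - n + b] * h[m + a, n + b]
            g[i, j] = total
    return g

image = np.arange(25, dtype=float).reshape(5, 5)
identity = np.array([[0, 0, 0],
                     [0, 1, 0],
                     [0, 0, 0]])
result = convolve2d_direct(image, identity)
```

With the identity kernel, `result` equals `image`, which is a quick sanity check on the index arithmetic.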

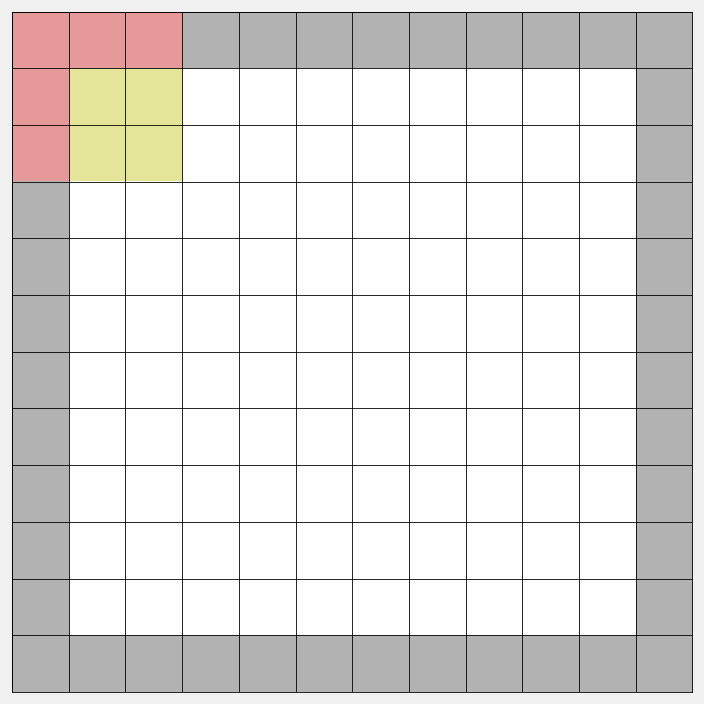

The filter window falls off the edge of the image.

A common strategy is to pad with zeros.

The image is effectively larger than the original.

We could wrap the pixels, from each edge to the opposite.

Again, the image is effectively larger.

Alternatively, we could repeat the pixels, extending each edge outward.
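The three border strategies above map onto modes of NumPy's `np.pad`. A small sketch, assuming a pad width of 1 (enough for a 3x3 kernel); the variable names are only illustrative.

```python
import numpy as np

image = np.array([[1, 2, 3],
                  [4, 5, 6],
                  [7, 8, 9]])

# Pad with zeros.
zero_padded = np.pad(image, 1, mode="constant", constant_values=0)

# Wrap pixels from each edge to the opposite edge.
wrapped = np.pad(image, 1, mode="wrap")

# Repeat the edge pixels outward.
repeated = np.pad(image, 1, mode="edge")

print(zero_padded)
print(wrapped)
print(repeated)
```

In each case the padded array is 5x5, so a 3x3 window centred on any original pixel stays inside it.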

What would the filtered image look like?

No change!

What would the filtered image look like?

Shifted left by 1 pixel.

What would the filtered image look like?

Blurred…
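The first two answers can be checked numerically. The kernels from the slides are not reproduced in this text, so the two below are assumptions: an identity kernel, and a kernel with a single 1 to the left of centre. Under the convolution definition given earlier (which flips the kernel), the latter gives \(g(i, j) = f(i, j + 1)\), so image content moves one pixel to the left; under a correlation convention the mirrored kernel would be needed. The helper `convolve2d` is a hypothetical name and assumes zero padding.

```python
import numpy as np

def convolve2d(f, h):
    """2D convolution with zero padding, built from shifted copies of f."""
    a, b = h.shape[0] // 2, h.shape[1] // 2
    fp = np.pad(f.astype(float), ((a, a), (b, b)), mode="constant")
    rows, cols = f.shape
    g = np.zeros((rows, cols))
    for m in range(-a, a + 1):
        for n in range(-b, b + 1):
            # This slice holds f(i - m, j - n) for every (i, j).
            g += h[m + a, n + b] * fp[a - m:a - m + rows, b - n:b - n + cols]
    return g

image = np.arange(16, dtype=float).reshape(4, 4)

identity = np.array([[0, 0, 0],
                     [0, 1, 0],
                     [0, 0, 0]])
shift_left = np.array([[0, 0, 0],
                       [1, 0, 0],
                       [0, 0, 0]])

unchanged = convolve2d(image, identity)
shifted = convolve2d(image, shift_left)
```

Here `shifted[:, :-1]` equals `image[:, 1:]`, and the last column is filled by the zero padding.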

Replace each pixel with the mean of its local neighbours:

\[ h = \frac{1}{9}\times \begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{bmatrix} \]

We can increase the size of the kernel to get a smoother image:

\[ h = \frac{1}{25}\times \begin{bmatrix} 1 & 1 & 1 & 1 & 1 \\ 1 & 1 & 1 & 1 & 1 \\ 1 & 1 & 1 & 1 & 1 \\ 1 & 1 & 1 & 1 & 1 \\ 1 & 1 & 1 & 1 & 1 \end{bmatrix} \]
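A minimal sketch of the mean filter for both kernel sizes. The function name `mean_filter` is hypothetical, and repeating the edge pixels at the border is an assumed choice. Because the kernel is symmetric, convolution and plain sum-of-products give the same result here.

```python
import numpy as np

def mean_filter(image, size=3):
    """Mean (box) filter with a size x size kernel; edges are repeated."""
    half = size // 2
    padded = np.pad(image.astype(float), half, mode="edge")
    rows, cols = image.shape
    out = np.zeros((rows, cols))
    # Sum the size*size shifted copies, then divide by the kernel area.
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + rows, dx:dx + cols]
    return out / size**2

# A single bright pixel makes the kernel footprint visible.
impulse = np.zeros((7, 7))
impulse[3, 3] = 1.0

small = mean_filter(impulse, size=3)   # 1/9 spread over a 3x3 block
large = mean_filter(impulse, size=5)   # 1/25 spread over a 5x5 block
```

The larger kernel spreads the same total intensity over more pixels, which is why the result looks smoother.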

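Similar to the mean filter, but the kernel weights follow a 2D Gaussian, so pixels nearer the centre contribute more (here \(g\) denotes the Gaussian function, not the filtered image):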

\[ g(x,y) = \frac{1}{2\pi\sigma^2}~ {\rm e}^{ - \frac{x^2+y^2}{2\sigma^2} } \]
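A discrete kernel can be obtained by sampling this function on an integer grid. The sketch below assumes an odd kernel size and renormalises the samples so they sum to 1, which makes the \(\frac{1}{2\pi\sigma^2}\) factor cancel in practice; the name `gaussian_kernel` is hypothetical.

```python
import numpy as np

def gaussian_kernel(size, sigma):
    """Sample a 2D Gaussian on a size x size grid centred at (0, 0)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    k = np.exp(-(x**2 + y**2) / (2 * sigma**2)) / (2 * np.pi * sigma**2)
    # Renormalise so filtering preserves the overall image brightness.
    return k / k.sum()

kernel = gaussian_kernel(5, sigma=1.0)
print(np.round(kernel, 3))
```

A kernel that is too small for the chosen \(\sigma\) truncates the tails of the Gaussian; a width of roughly \(6\sigma\) is a common rule of thumb.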

Smoothing effectively low pass filters the image.

If we have many images of the same scene:

What would the filtered image look like?

We can control the amount of sharpening:

\[ h_{sharp} = \begin{bmatrix} 0 & 0 & 0\\ 0 & 1 & 0\\ 0 & 0 & 0 \end{bmatrix} + \text{amount} \times \begin{bmatrix} 0 & -1 & 0\\ -1 & 4 & -1\\ 0 & -1 & 0 \end{bmatrix} \]
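The kernel can be built directly from this expression. In the sketch below, `sharpen_kernel` and `detail` are illustrative names; `amount` scales the second matrix only.

```python
import numpy as np

identity = np.array([[0, 0, 0],
                     [0, 1, 0],
                     [0, 0, 0]], dtype=float)

# Responds to local differences; its coefficients sum to zero.
detail = np.array([[0, -1, 0],
                   [-1, 4, -1],
                   [0, -1, 0]], dtype=float)

def sharpen_kernel(amount):
    """Identity kernel plus a scaled detail kernel."""
    return identity + amount * detail

print(sharpen_kernel(1.0))
print(sharpen_kernel(0.5))
```

For `amount = 1` the centre coefficient is 5 and the four neighbours are -1. The coefficients sum to 1 for any `amount`, so flat regions of the image are left unchanged.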

There is a nice interactive tool to view kernel operations here: https://setosa.io/ev/image-kernels/

The ImageMagick documentation has a nice list of kernels: https://legacy.imagemagick.org/Usage/convolve/

A high pass filter formed from a low pass filtered image.

Low pass filter removes high-frequency detail.

The sharpened image is the original image plus the unsharp mask multiplied by some factor.

We generally don’t want to boost all fine detail, as noise would also be enhanced.
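A minimal sketch of unsharp masking under some assumptions: a box blur stands in for the low pass filter (a Gaussian is also common), and a `threshold` parameter is added as one possible way to avoid boosting small differences that are likely to be noise. The names `box_blur` and `unsharp_mask` are hypothetical.

```python
import numpy as np

def box_blur(image, size=3):
    """Low pass filter: mean over a size x size window, edges repeated."""
    half = size // 2
    padded = np.pad(image.astype(float), half, mode="edge")
    rows, cols = image.shape
    out = np.zeros((rows, cols))
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + rows, dx:dx + cols]
    return out / size**2

def unsharp_mask(image, amount=1.0, threshold=0.0, size=3):
    """sharpened = original + amount * (original - blurred)."""
    image = image.astype(float)
    mask = image - box_blur(image, size)      # high-frequency detail
    mask[np.abs(mask) < threshold] = 0.0      # assumed noise gate
    return image + amount * mask

# A vertical step edge: three dark columns, three bright columns.
step = np.tile(np.array([0, 0, 0, 10, 10, 10], dtype=float), (5, 1))
sharpened = unsharp_mask(step, amount=1.0)
```

On the step edge, the result undershoots on the dark side and overshoots on the bright side, which is what makes the edge look crisper; flat regions are unchanged.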

2D Convolution can be thought of as comparing a little picture (the filter kernel) against all local regions in the image.

If the filter kernel contains a picture of something you want to locate inside the image (a template), the filter response should be maximised at the local region that most closely matches it.
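One way to sketch this idea is zero-mean normalised cross-correlation. This is a common variant chosen for illustration, not necessarily the algorithm used in the lecture: the template is compared without flipping (correlation rather than convolution), and each response is normalised so that bright regions do not win simply by being bright. The function name `match_template` is hypothetical.

```python
import numpy as np

def match_template(image, template):
    """Zero-mean normalised cross-correlation at every valid position."""
    image = np.asarray(image, dtype=float)
    template = np.asarray(template, dtype=float)
    th, tw = template.shape
    t = template - template.mean()
    t_norm = np.sqrt((t**2).sum())
    out_rows = image.shape[0] - th + 1
    out_cols = image.shape[1] - tw + 1
    response = np.zeros((out_rows, out_cols))
    for i in range(out_rows):
        for j in range(out_cols):
            patch = image[i:i + th, j:j + tw]
            p = patch - patch.mean()
            denom = t_norm * np.sqrt((p**2).sum())
            if denom > 0:  # skip perfectly flat patches
                response[i, j] = (p * t).sum() / denom
    return response

# Cut a template out of a random image and try to find it again.
rng = np.random.default_rng(0)
image = rng.random((20, 20))
template = image[5:9, 12:16].copy()

response = match_template(image, template)
best = np.unravel_index(np.argmax(response), response.shape)
```

Here `best` is the top-left corner of the best-matching region. Responses lie in the range -1 to 1, with 1 indicating a perfect match up to brightness and contrast.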

Algorithm: